AGU fall meeting: Part 3 - Abstract similarities

The American Geophysical Union (AGU) Fall Meeting is a geoscience conference held each year around Christmas in San Francisco. It represents a great opportunity for PhD students like me to show off their work and enjoy what the West Coast has to offer. Moreover, with nearly 24 000 attendees, the AGU Fall Meeting is also the largest Earth and space science meeting in the world. As such, it represents an interesting data set to dive into the geoscience academic world.

In this post, I use different information retrieval techniques taken from the field of natural language processing to explore the hidden patterns in the collection of abstracts submitted in 2015.

The objective is twofold:

- Identify semantic-based similarities between the contributions proposed at AGU to build a query-based recommendation system.

- Propose for each query a list of potential collaborators based on the authors of the papers proposed by the recommendation system.

For those who want to test the final recommendation system before understanding how it works, a version is available for testing through binder here.

Different natural language processing tools are available in Python to achieve this goal. After trying sklearn, I decided to settle on gensim, which has particularly fast implementations for working with large data sets (~20000 abstracts here).

The basic stages, which I detail in the following, are:

- Clean the data.

- Construct a valid embedding for the corpus.

- Compute the similarities between the documents within this embedding.

- Not satisfied? Go back to step 2 and iterate.

Data cleaning

Data cleaning is an essential step for a good recommendation system. Indeed, our model is going to use the cleaned corpus to build a consistent embedding of the abstracts and we do not want it to focus on unnecessary details. Among other things, I used the module unicodedata to remove all non-ASCII characters from the corpus.

    data = get_all_data('agu2015')
    # Keep only contributions with a title and an abstract of more than 100 words
    sources = [df for df in data if (''.join(df.title) != "") and (df.abstract != '') and (len(df.abstract.split(' '))>100)]
    abstracts = get_clean_abstracts(sources)
    titles = get_clean_titles(sources)

In the following, I’ll use one of my contributions to evaluate the consistency of our recommendation system.

    def name_to_idx(sources, name):
        ''' From an author name, return the indices of their contributions '''
        contrib = [f for f in sources if name in f.authors.keys()]
        return [sources.index(elt) for elt in contrib]

    my_contrib = name_to_idx(sources, 'Clement Thorey')
    print('Title : %s' % (titles[my_contrib[0]]))
    print('Abstract : %s' % (abstracts[my_contrib[0]]) + '\n\n')

    Title : Floor-Fractured Craters through Machine Learning Methods

    Abstract : Floor-fractured craters are impact craters that have
    undergone post impact deformations. They are characterized by
    shallow floors with a plate-like or convex appearance, wide floor
    moats, and radial, concentric, and polygonal
    floor-fractures. While the origin of these deformations has long
    been debated, it is now generally accepted that they are the
    result of the emplacement of shallow magmatic intrusions below
    their floor. These craters thus constitute an efficient tool to
    probe the importance of intrusive magmatism from the lunar
    surface. The most recent catalog of lunar-floor fractured craters
    references about 200 of them, mainly located around the lunar
    maria Herein, we will discuss the possibility of using machine
    learning algorithms to try to detect new floor-fractured craters
    on the Moon among the 60000 craters referenced in the most recent
    catalogs. In particular, we will use the gravity field provided by
    the Gravity Recovery and Interior Laboratory (GRAIL) mission, and
    the topographic dataset obtained from the Lunar Orbiter Laser
    Altimeter (LOLA) instrument to design a set of representative
    features for each crater. We will then discuss the possibility to
    design a binary supervised classifier, based on these features, to
    discriminate between the presence or absence of crater-centered
    intrusion below a specific crater. First predictions from
    different classifier in terms of their accuracy and uncertainty
    will be presented.

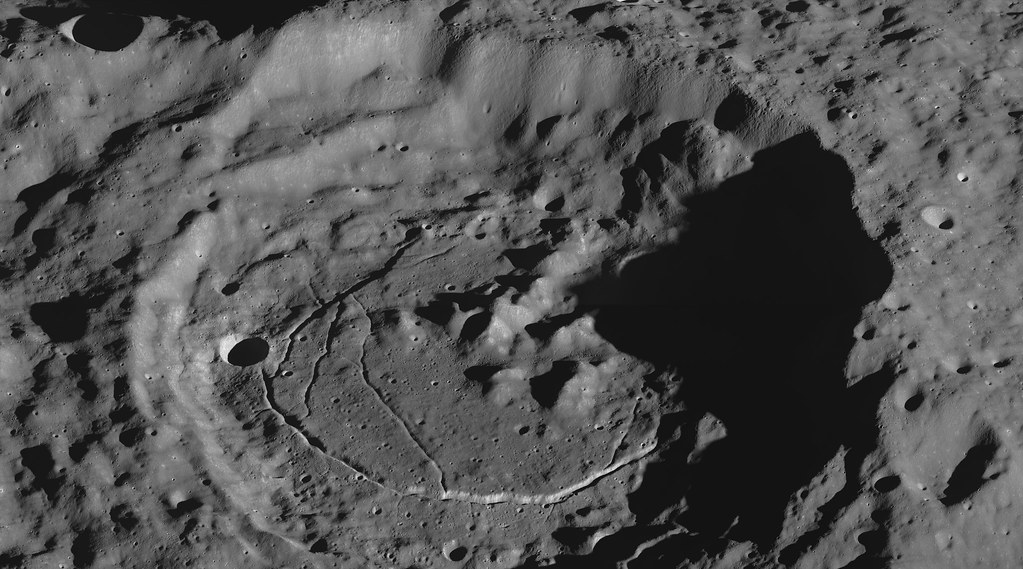

Maybe a bit of context can be useful here. My PhD was about the detection and the characterization of magmatic intrusions on terrestrial planets, with a special focus on the Moon. For those who wonder, a magmatic intrusion is a large volume of magma which, instead of rising to the surface and spreading lava everywhere (a volcano is a good example of that category), stalls at depth beneath the surface (less than a few km) where it cools and solidifies. On Earth, erosion and weathering can sometimes expose these intrusions at the surface. This is the case, for instance, in the Henry Mountains where a few laccoliths, i.e. solidified magmatic intrusions, stand out in the landscape as shown in the picture.

My contribution at AGU deals with the detection of lunar floor-fractured craters, which are preferential sites for magmatic intrusions in the lunar crust. Indeed, those craters are impact craters that have been heavily deformed following the emplacement of large magmatic intrusions (a few tens of km$^3$) below their floor. The resulting crater structures are abnormally shallow and crossed by extensive fracture networks. In this contribution, I discuss the possibility of using machine learning techniques to automatically detect potential floor-fractured craters among 60000 referenced lunar impact craters (for more details, the poster is available here).

Bag of Words model

The base representation for a corpus of text documents is called a Bag of Words (BoW). This BoW model looks at all the words in the corpus and first builds a dictionary that references each word (or token) it has seen by an index. Then, for each document in the corpus, it simply counts how many times each token in the dictionary appears in this particular document. The result is a large matrix of counts where each row is a token from the dictionary and each column is a particular document of the corpus. As you can guess, the matrix is mostly filled with zeros.
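As a minimal illustration of this counting step, the sketch below builds a dictionary and a count matrix for a toy corpus. The three documents and the helper name `build_bow` are made up for the example; they are not part of the AGU pipeline.

```python
from collections import Counter


def build_bow(docs):
    """Return (dictionary, matrix) for a list of whitespace-separated documents.

    dictionary maps each token to an integer index; matrix[i][j] is the
    number of times token i appears in document j.
    """
    tokenized = [doc.split() for doc in docs]
    dictionary = {}
    for tokens in tokenized:
        for token in tokens:
            # Assign the next free index the first time a token is seen
            dictionary.setdefault(token, len(dictionary))
    matrix = [[0] * len(docs) for _ in dictionary]
    for j, tokens in enumerate(tokenized):
        for token, count in Counter(tokens).items():
            matrix[dictionary[token]][j] = count
    return dictionary, matrix


# Toy corpus (hypothetical, for illustration only)
docs = ["crater moon crater", "moon magma", "climate model climate climate"]
dictionary, matrix = build_bow(docs)
for token, idx in sorted(dictionary.items(), key=lambda kv: kv[1]):
    print(token, matrix[idx])
```

Even on three short documents, most entries of the matrix are zero, which is why a sparse representation is preferable for a real corpus.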

Tokenizer

Under the hood, the BoW model assumes an efficient tokenizer function which is able to split each document into its own set of tokens. A vanilla tokenizer function looks like this

    def tokenizer(doc):
        return doc.split(' ')

which simply looks at a document and splits it into a list of tokens according to the white spaces occurring in the text. In the following, I will use a slightly more evolved version of this tokenizer which I embedded in a Tokenizer class.

    import re

    import gensim
    import Stemmer
    from stop_words import get_stop_words


    class Tokenizer(object):
        ''' Class to handle the tokenization of a document.

        parameter:
        add_bigram (Boolean): Add the possibility to add bigram to the resulting
        list of tokens
        '''

        def __init__(self, add_bigram):
            self.add_bigram = add_bigram
            self.stopwords = get_stop_words('english')
            self.stopwords += [u's', u't', u'can', u'will', u'just', u'don', u'now']
            self.stemmer = Stemmer.Stemmer('english')

        def bigram(self, tokens):
            if len(tokens) > 1:
                for i in range(0, len(tokens) - 1):
                    yield tokens[i] + '_' + tokens[i + 1]

        def tokenize_and_stem(self, text):
            tokens = list(gensim.utils.tokenize(text))
            filtered_tokens = []
            bad_tokens = []
            # filter out any tokens not containing letters (e.g., numeric tokens, raw
            # punctuation)
            for token in tokens:
                if re.search(r'(^[a-z]+$|^[a-z][\d]$|^[a-z]\d[a-z]$|^[a-z]{3}[a-z]*-[a-z]*$)', token):
                    filtered_tokens.append(token)
                else:
                    bad_tokens.append(token)
            filtered_tokens = [
                token for token in filtered_tokens if token not in self.stopwords]
            # Use lists rather than map objects so that bigrams can be appended
            stems = [self.stemmer.stemWord(token) for token in filtered_tokens]
            if self.add_bigram:
                stems += list(self.bigram(stems))
            return [str(stem) for stem in stems]

The core of this tokenizer class lies within the tokenize_and_stem function. This function uses the gensim library to first break each document into elementary tokens. Next, using simple regular expressions, it keeps only suitable tokens and removes all the others.

In particular,

- ^[a-z]+$ keeps only words made of letters.

- ^[a-z][\d]$ selects tokens that have 2 characters, one letter, one digit (molecule stuff).

- ^[a-z][\d][a-z]$ selects tokens that have 3 characters, one letter, one digit, one letter (again molecule stuff).

- ^[a-z]{3}[a-z]*-[a-z]*$ includes some tokens that are composed of two words joined by -.
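The four patterns above can be tried on a few sample tokens with the standard `re` module. This is only a quick check, assuming the same pattern as in tokenize_and_stem; the sample tokens and the helper name `keep_token` are made up for the illustration. Note that the pattern only accepts lowercase letters, so it assumes the text has been lowercased beforehand.

```python
import re

# Same pattern as in tokenize_and_stem, written as a raw string
PATTERN = re.compile(
    r'(^[a-z]+$|^[a-z][\d]$|^[a-z]\d[a-z]$|^[a-z]{3}[a-z]*-[a-z]*$)')


def keep_token(token):
    """Return True if the token matches one of the four accepted shapes."""
    return PATTERN.search(token) is not None


# Hypothetical tokens chosen to exercise each alternative
examples = ['crater', 'o2', 'h2o', 'floor-fractured', '2015', 'co2', 'Moon']
for token in examples:
    print(token, keep_token(token))
```

The first four tokens are kept, one for each alternative of the pattern. The purely numeric token, the capitalized word and 'co2' (two letters followed by a digit) are rejected.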

Next, I use a stopword list provided by the stop_words module to filter out all the common tokens of the English language. Indeed, while words like ‘the’ or ‘as’ are most likely to be present everywhere, they do not carry meaningful information for our purpose. Finally, I also incorporate a last stage of stemming for each token. Stemming is the term used in information retrieval to describe the process of reducing words to their word stem, base or root form, which is generally a written word form.

For instance, imagine this document

“Here we show that running is good for health. Indeed runner are quite healthy. Though they have run a lot in their runly life, they are quite good at that.”

Clearly, this document is all about running! Nevertheless, without the stemming part in our tokenizer, each of ‘running’, ‘runner’, ‘run’ and ‘runly’ has a weight (count) of only 1, less than ‘good’, which appears twice. In contrast, stemming aims to reduce ‘running’, ‘run’, ‘runly’ and ‘runner’ to their common stem, namely ‘run’. The word ‘run’ in the BoW model would then have a weight of 4 for this document, clearly underlining its importance! I use the Snowball stemmer provided by the PyStemmer library for stemming.

Note that the last part of the function also makes it possible to incorporate bi-grams in the final list of tokens, i.e. all pairs of consecutive stemmed words in the document, which is a common practice when using the BoW model.

Dictionary

From there, we need to build a dictionary of all possible tokens in the corpus. Gensim is built in a memory-friendly fashion. Therefore, instead of loading the whole corpus into memory, tokenizing and stemming everything and seeing what remains, it allows us to build the dictionary document by document, with one document in memory at a time.

    from gensim import corpora

    # Path to work in
    abstractf = PATH_TO_SAVE_THE_DIFFERENT_FILES
    # First, write the document corpus on a txt file, one document per line.
    write_clean_corpus(abstracts, abstractf + '_data.txt')
    # Create the tokenizer object
    tokenizer = Tokenizer(add_bigram=False)
    # Next create the dictionary by iterating over the abstracts, one per line in the txt file
    dictionary = corpora.Dictionary(tokenizer.tokenize_and_stem(line) for line in open(abstractf + '_data.txt'))
    dictionary.save(abstractf + '_raw.dict')

The resulting dictionary contains 21723 tokens. While we could work out a BoW model from there, it is often a good idea to remove extreme tokens. For instance, a token appearing in only 1 abstract is not going to help us build an efficient recommendation system. Similarly, a token that appears in all the documents is not likely to carry relevant information for our purpose either. I therefore decided to remove all tokens that appear in fewer than 5 abstracts or in more than 80% of them. Note that creating the dictionary can take up to 10 minutes on my laptop, which makes serialization a good idea.
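To make the filtering rule concrete, here is a small pure-Python sketch of the idea on a toy corpus. The function `filter_by_document_frequency` and the five documents are hypothetical; the sketch only mirrors the rule described above and is not gensim's implementation.

```python
def filter_by_document_frequency(tokenized_docs, no_below=5, no_above=0.8):
    """Keep tokens found in at least `no_below` documents and in no more
    than a fraction `no_above` of all documents."""
    n_docs = len(tokenized_docs)
    doc_freq = {}
    for tokens in tokenized_docs:
        # set() so that a token is counted once per document
        for token in set(tokens):
            doc_freq[token] = doc_freq.get(token, 0) + 1
    return {token for token, df in doc_freq.items()
            if df >= no_below and df <= no_above * n_docs}


# Toy corpus of 5 tokenized documents (hypothetical)
docs = [['crater', 'moon', 'studi'],
        ['crater', 'studi'],
        ['magma', 'studi'],
        ['moon', 'studi'],
        ['climat', 'studi']]
kept = filter_by_document_frequency(docs, no_below=2, no_above=0.8)
print(sorted(kept))
```

With these toy thresholds, 'studi' is dropped because it appears in every document, 'magma' and 'climat' are dropped because they appear only once, and only 'crater' and 'moon' survive. In gensim, the same kind of filtering is a single call to filter_extremes on the dictionary.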

    dictionary = corpora.Dictionary.load(abstractf + '_raw.dict')
    dictionary.filter_extremes(no_below=5, no_above=0.80, keep_n=200000)
    # Reverse mapping, from token id to token
    dictionary.id2token = {k: v for v, k in dictionary.token2id.items()}
    dictionary.save(abstractf + '.dict')

BoW representation

Now that we have the dictionary, it is actually easy to obtain the BoW representation of any document. We just have to tokenize the document using the same function used to build the dictionary and count the occurrences of each resulting token. Each dictionary in gensim possesses a method doc2bow which does exactly that and returns the BoW representation as a sparse vector, i.e. a vector where only words that have a count different from zero are returned.

For instance, the BoW representation of my first abstract is

    import pandas as pd

    my_contrib_bow = dictionary.doc2bow(tokenizer.tokenize_and_stem(abstracts[my_contrib[0]]))
    # Append the token string to each (id, count) pair
    df = [f + (dictionary.id2token[f[0]],) for f in my_contrib_bow]
    df = pd.DataFrame(df, columns=['id', 'occ', 'token']).sort_values(by='occ', ascending=False)
    df.index = range(len(df))
    df.head(5)

where the results are presented as a pandas dataframe for clarity, with three columns: the id assigned to each token by the Dictionary class, its count and the corresponding token. Note that the BoW representation of my abstract, which highlights the importance of the stemmed tokens crater, lunar, intrusion, floor and classifi, is fairly accurate.

By converting each abstract of the corpus using this doc2bow method, we can obtain the BoW representation of our full corpus. A memory-careless way to do that is to just iterate the doc2bow method of our dictionary over the abstract list we defined at the beginning. Nevertheless, this would end up storing the whole doc2bow representation in memory as a huge matrix, which can be problematic, at least for my laptop.

Instead, gensim has been designed such that it only requires a corpus to be able to return one document vector (for instance, the doc2bow representation of the document here) at a time. We then define the BoW corpus as an object MyCorpus where the method __iter__ is defined to iterate over and transform each line of a .txt file where the content of the abstracts is stored.

    import os


    class MyCorpus(Tokenizer):

        def __init__(self, name, add_bigram):
            super(MyCorpus, self).__init__(add_bigram)
            self.name = name
            self.load_dict()

        def load_dict(self):
            if not os.path.isfile(self.name + '.dict'):
                print('You should build the dictionary first !')
            else:
                setattr(self, 'dictionary',
                        corpora.Dictionary.load(self.name + '.dict'))

        def __iter__(self):
            for line in open(self.name + '_data.txt'):
                # assume there's one document per line, tokens separated by
                # whitespace
                yield self.dictionary.doc2bow(self.tokenize_and_stem(line))

    # add_bigram must match the setting used to build the dictionary
    bow_corpus = MyCorpus(abstractf, add_bigram=False)
    # Saved as '.mm' so that it matches the path loaded in the next sections
    corpora.MmCorpus.serialize(abstractf + '.mm', bow_corpus)

Recommendation

In the BoW representation of our corpus, each abstract is a point in a high-dimensional embedding (an 8430-dimensional embedding, to be exact). The distance or the similarity between one abstract and the rest of the corpus, according to some metric, can then be used to compare different contributions and, in turn, to provide a recommendation list for a specific query.

The Euclidean distance is always a natural first choice to design a distance in an arbitrary space. Given two vectors $\vec{a}$ and $\vec{b}$, it is equal to

\[d(\vec{a},\vec{b}) = \sqrt{(\vec{b}- \vec{a})\cdot(\vec{b}- \vec{a}) }\]

However, we’d like our measure to be independent of the magnitude of the two vectors. For instance, we’d like to identify as similar two abstracts which contain exactly the same tokens even if their counts differ significantly. The Euclidean distance clearly does not have this property.

Accordingly, a more reliable measure for our purpose is the cosine similarity. For two vectors $\vec{a}$ and $\vec{b}$, the cosine similarity $d$ is defined as

\[d(\vec{a},\vec{b})= \frac{\vec{a} \cdot \vec{b}}{|\vec{a}||\vec{b}|} = \cos(\vec{a},\vec{b})\]

In particular, this similarity measure is the dot product of the two normalized vectors and hence depends only on the angle between the two vectors (which is where its name comes from ;). It ranges from -1 when two vectors point in opposite directions to 1 when they point in the same direction.
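The difference between the two measures can be checked on a toy example. The sketch below uses only the standard library; the three count vectors are hypothetical and simply stand for a short abstract, a longer abstract with the same tokens in the same proportions, and an unrelated abstract.

```python
import math


def euclidean_distance(a, b):
    """Square root of the dot product of (b - a) with itself."""
    return math.sqrt(sum((y - x) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical count vectors over the same three tokens
short_abstract = [1, 2, 0]
long_abstract = [10, 20, 0]   # same tokens, ten times the counts
other_abstract = [0, 0, 3]    # shares no token with the first two

print(euclidean_distance(short_abstract, long_abstract))
print(cosine_similarity(short_abstract, long_abstract))
print(cosine_similarity(short_abstract, other_abstract))
```

The first two abstracts are far apart in terms of Euclidean distance, yet their cosine similarity is 1 (up to rounding) because they point in the same direction. The unrelated abstract gets a cosine similarity of 0.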

To compute the similarity of one query against our BoW representation, the natural procedure is to first transform our sparse representation into its dense equivalent, i.e. a matrix where the number of rows corresponds to the number of tokens in the dictionary and the number of columns to the number of abstracts in the corpus. Then, we normalize each column of the matrix such that each document corresponds to a unit vector in the representation space. Finally, we take the dot product of the transposed matrix with the desired normalized query to get its cosine similarity against all the documents in the corpus.

Gensim contains efficient utility functions to convert to and from numpy matrices and therefore, this translates to

    import numpy as np

    def get_score(doc_id):
        # First load the corpus and the dictionary
        bow_corpus = corpora.MmCorpus(abstractf + '.mm')
        dictionary = corpora.Dictionary.load(abstractf + '.dict')
        # Transform our sparse representation into dense
        numpy_matrix = gensim.matutils.corpus2dense(bow_corpus, num_terms=len(dictionary))
        # Normalize each abstract in the corpus
        normalized_matrix = numpy_matrix / np.sqrt(np.sum(numpy_matrix * numpy_matrix, axis=0))
        # Take the dot product of the resulting matrix with the query to get the relevant cosine similarity
        return np.dot(normalized_matrix.T, normalized_matrix[:, doc_id])

The recommendations for my abstract and their associated cosine similarities are

Recom 1 - Cosine: 1.000 - Title: Floor-Fractured Craters through Machine Learning Methods

Recom 2 - Cosine: 0.563 - Title: Structural and Geological Interpretation of Posidonius Crater on the Moon

Recom 3 - Cosine: 0.482 - Title: Preliminary Geological Map of the Ac-H-2 Coniraya Quadrangle of Ceres An Integrated Mapping Study Using Dawn Spacecraft Data

Recom 4 - Cosine: 0.448 - Title: The collisional history of dwarf planet Ceres revealed by Dawn

Recom 5 - Cosine: 0.435 - Title: Initial Results from a Global Database of Mercurian Craters

Recom 6 - Cosine: 0.416 - Title: Morphologic Analysis of Lunar Craters in the Simple-to-Complex Transition

In particular, the cosine similarity of the query against itself returns 1, which in itself is reassuring! The cosine similarity then drops below 0.6. The recommendations are fairly accurate (I did indeed attend most of these presentations), but we can get a slight increase of the score using a trick called tf-idf normalization.

TF-IDF representation

Indeed, one of the problems with the BoW representation is that it often puts too much weight on common words of the corpus. While we removed the most common words of the English language, words like ‘present’, ‘show’ or any other word commonly used when writing an abstract can add some noise to our recommendations. In particular here, we would like to put more weight on the tokens that make each abstract specific.

A common way to do this is to use a Tf-Idf normalization, which re-weights each count in the BoW representation according to how many documents of the corpus contain the token. Tf stands for term frequency while Tf-Idf stands for term frequency times inverse document frequency. This way, the weight of tokens that are common throughout the corpus is significantly lowered.
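As a rough illustration of the re-weighting, here is a pure-Python sketch of one common variant: each count is multiplied by log2(N / df), where N is the number of documents and df the number of documents containing the token, and each document is then scaled to unit length. The exact formula a given library applies depends on its settings, so this weighting, the function name `tfidf_weights` and the toy corpus are all assumptions made for the example.

```python
import math


def tfidf_weights(bow_docs):
    """Re-weight raw counts by log2(N / df), then scale each document
    to unit length. bow_docs is a list of {token: count} dicts."""
    n_docs = len(bow_docs)
    doc_freq = {}
    for bow in bow_docs:
        for token in bow:
            doc_freq[token] = doc_freq.get(token, 0) + 1
    weighted_docs = []
    for bow in bow_docs:
        weights = {t: c * math.log2(n_docs / doc_freq[t])
                   for t, c in bow.items()}
        norm = math.sqrt(sum(w * w for w in weights.values()))
        if norm > 0:
            weights = {t: w / norm for t, w in weights.items()}
        weighted_docs.append(weights)
    return weighted_docs


# Toy corpus (hypothetical): 'present' occurs in every document
bow_docs = [{'present': 2, 'crater': 1},
            {'present': 1, 'magma': 3},
            {'present': 1, 'crater': 1, 'climat': 2},
            {'present': 3, 'climat': 1}]
weighted = tfidf_weights(bow_docs)
for weights in weighted:
    print({t: round(w, 3) for t, w in weights.items()})
```

In this toy corpus, 'present' appears in all four documents, so its weight drops to zero everywhere, however often it occurs. The tokens that are specific to a few documents carry all the weight.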

An implementation is available in gensim and can be easily combined with the BoW representation to get the representation of the corpus in the tf-idf space.

    from gensim import corpora, models

    # First load the corpus and the dictionary
    bow_corpus = corpora.MmCorpus(abstractf + '.mm')
    dictionary = corpora.Dictionary.load(abstractf + '.dict')
    # Initialize the tf-idf model
    tfidf = models.TfidfModel(bow_corpus)
    # Compute the tfidf of the corpus itself
    tfidf_corpus = tfidf[bow_corpus]
    # Serialize both for reuse
    tfidf.save(abstractf + '_tfidf.model')
    corpora.MmCorpus.serialize(abstractf + '_tfidf.mm', tfidf_corpus)

In this embedding, the cosine similarity of my abstract against the rest of the corpus gives

Recom 1 - Cosine: 1.000 - Title: Floor-Fractured Craters through Machine Learning Methods

Recom 2 - Cosine: 0.654 - Title: Structural and Geological Interpretation of Posidonius Crater on the Moon

Recom 3 - Cosine: 0.509 - Title: The collisional history of dwarf planet Ceres revealed by Dawn

Recom 4 - Cosine: 0.506 - Title: Preliminary Geological Map of the Ac-H-2 Coniraya Quadrangle of Ceres An Integrated Mapping Study Using Dawn Spacecraft Data

Recom 5 - Cosine: 0.495 - Title: Hydrological Evolution and Chemical Structure of the Hyper-acidic Spring-lake System on White Island, New Zealand

Recom 6 - Cosine: 0.484 - Title: Initial Results from a Global Database of Mercurian Craters

While the cosine similarity is indeed slightly better, it still barely passes above 0.5 for most recommendations. One of the reasons might be the number of dimensions of the representation space. Indeed, in both the BoW and Tf-Idf models, we are trying to calculate distances, or similarities, in a very high-dimensional space. One problem with such a huge number of dimensions is that similarity measures tend to all look alike in such a high-dimensional embedding. A gain in performance could surely be obtained by reducing the size of the representation space.

Latent Semantic Analysis (LSA) or Indexing (LSI)

And here comes Latent Semantic Analysis (LSA) or Indexing (LSI). LSI is a common method in information retrieval to reduce the dimension of the representation space. The idea behind it is that a lot of the dimensions in the previous representations are redundant. For instance, the words machine and learning are likely to occur together. Therefore, shrinking these two dimensions to only one, formed by a linear combination of the tokens machine and learning, would reduce the dimension with little loss of information. More generally, Latent Semantic Analysis aims to reduce the number of dimensions while keeping as much as possible of the information present in the higher-dimensional space, by identifying deep semantic patterns in the corpus.

To identify this semantic structure, Latent Semantic Analysis uses a linear algebra method called Singular Value Decomposition (SVD). More formally, we know that the tf-idf representation can be written as a huge matrix $X$ where each row corresponds to a token in the dictionary and each column corresponds to a document. Its size is (T,N) where T is the number of tokens and N the number of abstracts.

The maths behind LSI factorizes the matrix $X$ as

\[X = UDV^T\]

where $U$ is a unitary matrix, i.e. formed by orthogonal unit-norm vectors, of size (T,T), $D$ is a rectangular diagonal matrix of size (T,N) and $V$ is also a unitary matrix, of size (N,N). The vectors in $U$ are called left singular vectors, the vectors in $V$ are called right singular vectors and the diagonal of $D$ contains the corresponding singular values (loosely referred to as eigenvectors and eigenvalues in the rest of this post).

In particular, this factorization has a nice property regarding the matrix $X^TX$, which contains all the cross-document dot products. Indeed,

\[X^TX = (UDV^T)^T(UDV^T) = (VD^TU^T)(UDV^T) = VD^TDV^T\]

and therefore, the orthonormal vectors in $V$ can be seen as the eigenvectors of the cross-document correlation matrix $X^TX$. As $D$ is diagonal, $D^TD$ simply contains the squared diagonal values of $D$, which are the eigenvalues of $X^TX$. These values can therefore be used as a direct proxy to evaluate the variance in the cross-document correlation matrix.

A rank $k$ approximation of $X$ can be obtained by

\[X_k = U_kD_kV^T_k\]

where $U_k$ and $V_k$ are the matrices $U$ and $V$ in which we kept only the first $k$ eigenvectors, i.e. of size (T,k) and (N,k) respectively, and $D_k$ is a square matrix of size (k,k) which contains the first $k$ eigenvalues of the diagonal. More importantly, $V_kD^T_k$ gives us the new representation, called the LSI space, where each document is characterized by $k$ features. The SVD is thus able to identify a consistent lower-dimensional approximation of the higher-dimensional tf-idf space.
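One way to see why $V_kD_k^T$ is a sensible document representation, sketched here with the notations above, is to compute the cross-document dot products of the rank $k$ approximation:

\[X_k^TX_k = (U_kD_kV_k^T)^T(U_kD_kV_k^T) = V_kD_k^TU_k^TU_kD_kV_k^T = (V_kD_k^T)(V_kD_k^T)^T\]

where the last step uses the fact that the columns of $U_k$ are orthonormal, so that $U_k^TU_k$ is the identity matrix of size (k,k). The dot product between two rows of $V_kD_k^T$, i.e. between two documents in the LSI space, is therefore the same as the dot product between the corresponding columns of $X_k$. Computing similarities with $k$ features per document thus gives the same result as computing them on the full rank $k$ approximation of the tf-idf matrix.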

Gensim implements the Latent Semantic Analysis under a model called LsiModel which can be used on top of our previous representation easily. It requires a parameter, num_topics, which corresponds to the desired dimension of the final LSI space. I settled on num_topics=500 for good performance.

    # First load the corpus and the dictionary
    tfidf_corpus = corpora.MmCorpus(abstractf + '_tfidf.mm')
    dictionary = corpora.Dictionary.load(abstractf + '.dict')
    # Initialize the lsi model
    lsi = models.LsiModel(tfidf_corpus, id2word=dictionary, num_topics=500)
    # Compute the lsi of the corpus itself
    lsi_corpus = lsi[tfidf_corpus]
    # Serialize both for reuse
    lsi.save(abstractf + '_lsi.model')
    corpora.MmCorpus.serialize(abstractf + '_lsi.mm', lsi_corpus)

where we used $k=500$ here. The matrices $U$ and $D$ can be easily extracted from the model and we verify that the returned lsi_corpus corresponds to $V_kD_k^T$.

    V = gensim.matutils.corpus2dense(lsi_corpus, len(lsi.projection.s)).T / lsi.projection.s
    lsi_corpus_dense = gensim.matutils.corpus2dense(lsi_corpus, len(lsi.projection.s))
    np.allclose(np.dot(V, np.diag(lsi.projection.s)), lsi_corpus_dense.T)

which returns True. We can also verify that we picked a sufficient order for the approximation by plotting the eigenvalues of the SVD, which are sorted in decreasing order.

Most of the information is indeed contained in the first 100 dimensions of our new representation space.

Finally, in this LSI space, the cosine similarity looks much more promising!

Recom 1 - Cosine: 1.000 - Title: Floor-Fractured Craters through Machine Learning Methods

Recom 2 - Cosine: 0.857 - Title: Structural and Geological Interpretation of Posidonius Crater on the Moon

Recom 3 - Cosine: 0.823 - Title: Lunar Crater Interiors with High Circular Polarization Signatures

Recom 4 - Cosine: 0.809 - Title: The collisional history of dwarf planet Ceres revealed by Dawn

Recom 5 - Cosine: 0.797 - Title: An Ice-rich Mantle on Ceres from Dawn Mapping of Central Pit and Peak Crater Morphologies

Recom 6 - Cosine: 0.795 - Title: Morphologic Analysis of Lunar Craters in the Simple-to-Complex Transition

Visualization of the LSI space

The LSI reduction process identifies latent semantic structures in the corpus to effectively reduce the dimension of the representation. A first way to look at these semantic structures is to look directly at the matrix $U$, which expresses the coordinates of the eigenvectors in terms of the dictionary tokens.

    # Define a function which returns the ten tokens with the largest
    # coefficients in the k-th eigenvector (1<=k<=500); n is currently unused
    def print_n_topics(n, k):
        k -= 1
        # Token ids sorted by decreasing coefficient in eigenvector k
        order = np.argsort(lsi.projection.u[:, k])[::-1][:10]
        words = [dictionary.id2token[i] for i in order]
        coeff = ['%1.3f' % lsi.projection.u[i, k] for i in order]
        return ' + '.join(c + 'x' + w for w, c in zip(words, coeff))

For instance, the first eigenvalue, which explains most of the information contained in the corpus, is associated with the following eigenvector

u'0.119xmodel + 0.112xwater + 0.105xclimat + 0.098xsoil + 0.096xdata + 0.094xchang + 0.091xice + 0.088xsurfac + 0.080xregion + 0.080xtemperatur'

It clearly deals with global atmospheric models which, as we have seen in the previous post, are a very important topic of the 2015 conference. Nevertheless, the linear combination is not always straightforward to interpret. For instance, the 42nd eigenvector looks like

u'0.254xstorm + 0.192xionospher + 0.142xflux + 0.133xglacier + 0.123xheat + 0.123xaerosol + 0.107xgeomagnet + 0.103xforest + 0.103xal + 0.103xet'

which links aerosols with glaciers and the ionosphere…

A better idea is to use t-SNE, an efficient method to visualize a high-dimensional space in 2D. t-SNE embeds the 500-dimensional vectors of the LSI space into a low-dimensional space (here 2D) while trying to preserve the cosine distances between points. In short, points that are close in the LSI space according to the cosine similarity should be close on the 2D plot. I assigned to each point in the t-SNE representation a color according to its section, and gave similar colors to sections with closely related topics.

The result looks very nice. While we have not entirely reconstructed the higher structural hierarchy of the conference (the sections), some patterns clearly stand out. In particular,

- Space Physics and Aeronomy (SPA), together with Planetary Sciences, forms a nice cluster on the top right of the representation.

- Atmospheric Sciences cluster on the top.

- Education, Union and Public Affairs cluster together very neatly on the bottom left.

- Biogeosciences occupy the left-hand side of the representation.

- Seismology and Tectonophysics cluster on the bottom right.

- Volcanology, Natural Hazards and the Study of the Earth's Deep Interior cluster on the right.

- Nonlinear Geophysics, which applies to a lot of different topics, naturally clusters at the center.

This shows us the strength of the method used in this post to identify deep hidden structures in the corpus of text composed of the abstracts of the conference. This also supports our recommendation system.

Recommendation and potential collaborator search

One thing I found particularly hard at my first AGU was not to get lost in the flow of presentations. Indeed, at such a conference, one has to find a good trade-off between going everywhere to see everything and going nowhere to see nothing. Follow the first option and you will most likely get rapidly saturated with information. Follow the second option and, while you will have plenty of time to go sightseeing in San Francisco, the geoscience academic world might leave you behind ;).

While there is still a lot of room for improvement here, the recommendation system we designed could be very useful. It could be particularly useful for newcomers who are not really at ease with searching the program for hours in the hope of finding something closely related to what they do during their PhD. In addition, as you can see on the t-SNE representation, the recommendation system we developed here does not strictly follow the hierarchy proposed by the AGU conveners and can put you in touch with presentations far from your original field but still very close in content, presentations that you might not have heard of without the strength of Latent Semantic Indexing ;)

The final wrapper for our recommendation system looks like

    import os

    import numpy as np
    import pandas as pd
    from gensim import corpora, models, similarities


    class RecomendationSystem(object):

        def __init__(self, path_model):
            self.model_saved = path_model
            self.abstractf = os.path.join(self.model_saved, 'abstract')
            # Load the titles and abstract + links
            self.sources = pd.read_csv(os.path.join(self.abstractf + '_sources.txt'),
                                       names=['title', 'link'])
            self.titles = self.sources.title.tolist()
            self.links = self.sources.link.tolist()
            # Load the necessary models, the lsi corpus and the corresponding index
            self.tokeniser = Tokenizer(False)
            self.dictionary = corpora.Dictionary.load(self.abstractf + '.dict')
            self.tfidf = models.TfidfModel.load(self.abstractf + '_tfidf.model')
            self.lsi = models.LsiModel.load(self.abstractf + '_lsi.model')
            self.corpus = corpora.MmCorpus(self.abstractf + '_lsi.mm')
            self.index = similarities.MatrixSimilarity.load(
                self.abstractf + '_lsi.index')

        def transform_query(self, query):
            # Transform the query in lsi space
            # Transform in the bow representation space
            vec_bow = self.dictionary.doc2bow(
                self.tokeniser.tokenize_and_stem(query))
            # Transform in the tfidf representation space
            vec_tfidf = self.tfidf[vec_bow]
            # Transform in the lsi representation space
            vec_lsi = self.lsi[vec_tfidf]
            return vec_lsi

        def recomendation(self, query):
            vec_lsi = self.transform_query(query)
            # Get the cosine similarity of the query against all the abstracts
            cosine = self.index[vec_lsi]
            # Sort them by decreasing similarity and return a nice dataframe
            order = np.argsort(cosine)[::-1]
            results = pd.DataFrame(np.stack((cosine[order],
                                             np.array(self.titles)[order],
                                             np.array(self.links)[order])).T,
                                   columns=['CosineSimilarity', 'title', 'link'])
            return results

        def get_recomendation(self, query, n):
            df = self.recomendation(query).head(n)
            for i, row in df.iterrows():
                print('The %d recommendation, cosine similarity of %1.3f is ' % (i + 1, float(row.CosineSimilarity)))
                print(' %s' % (row.title))
                print('%s \n' % (row.link))

Note that this recommendation class can handle not only the abstracts in the corpus, but any possible query. The only thing to ensure, which is taken care of by the method transform_query, is that the query is appropriately transformed into the corresponding LSI space. In this wrapper, we also use a gensim utility which allows us to rapidly compute the similarity of our query against all the abstracts in the corpus (more details in the gensim tutorial).

The second aim of this project was to come up with a wrapper that could propose a list of collaborators/researchers in the field, relative to a specific query. Now that we have a recommendation system, we can easily build this feature on top of it using the data collected in the first post on each contributor. The full version is available here and a live demo is available through binder here. For instance, for my first contribution and based on only 1 abstract, it returns

CLEMENT THOREY from the Institut de Physique du Globe de Paris, FRANCE

Based on his/her abstract untitled

Floor-Fractured Craters through Machine Learning Methods

https://agu.confex.com/agu/fm15/meetingapp.cgi/Paper/67077

only myself, as expected!
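The idea behind this collaborator lookup can be sketched in a few lines. This is not the code of the full version mentioned above: the function `potential_collaborators`, the shape of its input (a ranked list of title and author-list pairs) and the placeholder names are all assumptions made for the illustration.

```python
def potential_collaborators(recommended, n_abstracts):
    """Collect the authors of the top recommendations.

    recommended: list of (title, authors) tuples sorted by decreasing
    cosine similarity, where authors is a list of names.
    Returns a dict mapping each author to the titles that put them on
    the list, most similar abstract first.
    """
    collaborators = {}
    for title, authors in recommended[:n_abstracts]:
        for name in authors:
            collaborators.setdefault(name, []).append(title)
    return collaborators


# Hypothetical, already-ranked recommendations (names are placeholders)
recommended = [('Abstract A', ['Author One', 'Author Two']),
               ('Abstract B', ['Author Two', 'Author Three']),
               ('Abstract C', ['Author Four'])]
collaborators = potential_collaborators(recommended, 2)
for name, titles in collaborators.items():
    print(name, titles)
```

Keeping the titles attached to each name makes it possible to tell the user why a given researcher was suggested, as in the output shown above.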

Conclusion

This post concludes a series of three posts on some data I have collected on the American Geophysical Union (AGU) Fall Meeting, held each year around Christmas in San Francisco. It presents the final part of this project, which aims to build an efficient abstract and potential collaborator recommendation system based on the abstract corpus of the conference.

To achieve this goal, we reviewed different ways to transform a corpus of documents into a consistent mathematical representation, i.e. an embedding where each document is a point in a high-dimensional space. Such an embedding reduces the task of recommendation to the calculation of the cosine similarity between these high-dimensional points.

We settled on a recommendation system based on a 500-dimensional LSI space, which gives very intuitive results according to the 2D t-SNE representation of the representation space. The next step might be to build an application on top of this Python back-end and maybe propose such a system for the next conference in 2016 ;)!